Point Data Documentation

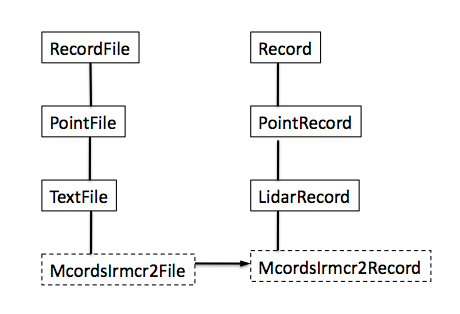

This page describes how to develop a new point data file reader using the RAMADDA point data framework, which is built on a record-oriented file reading framework. To support a new point data format, you define a new Java File class that creates a Record class. The Record class knows how to read one record from the file.
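The File/Record split described above can be illustrated with a minimal, self-contained sketch. The class names `SketchFile` and `SketchRecord` are hypothetical and are not the RAMADDA base classes; the sketch also takes the file contents as a string instead of opening a real file. It only shows the division of labor: the file object creates the record, and the record parses one line at a time.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class RecordFileSketch {

    /** Hypothetical record: holds the values of one line and knows how to read them. */
    public static class SketchRecord {
        public double[] values = new double[0];

        /** Parses one comma-delimited line into this record's values. */
        public void read(String line) {
            String[] tokens = line.split(",");
            values = new double[tokens.length];
            for (int i = 0; i < tokens.length; i++) {
                values[i] = Double.parseDouble(tokens[i].trim());
            }
        }
    }

    /** Hypothetical file class: does the setup and creates the record. */
    public static class SketchFile {
        private final String contents;

        public SketchFile(String contents) {
            this.contents = contents;
        }

        /** A real reader would return its format-specific record here. */
        public SketchRecord makeRecord() {
            return new SketchRecord();
        }

        /** Reuses one record for every data line, skipping blank and "#" lines. */
        public List<double[]> readAll() {
            List<double[]> rows = new ArrayList<>();
            SketchRecord record = makeRecord();
            for (String line : contents.split("\\R")) {
                if (line.trim().isEmpty() || line.startsWith("#")) {
                    continue;
                }
                record.read(line);
                rows.add(record.values.clone());
            }
            return rows;
        }
    }

    public static void main(String[] args) {
        SketchFile file = new SketchFile(
            "# header line\n2000, 1, 15, 15, 0.4, 0.2, -2.5\n2000, 2, 15, 46, 0.6, 0.2, -3.4\n");
        for (double[] row : file.readAll()) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```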

svn checkout svn://svn.code.sf.net/p/ramadda/code/ ramadda-code

The point data file reading code is in:

src/org/ramadda/data/point

The point data plugin is in:

src/org/ramadda/geodata/point

# PBO H2O Data Portal - http://xenon.colorado.edu/portal
# created 09-Feb-2014
# cast 39.191022 249.322687 2245.0 / station Lat. Lon. Elev.(m)
# Product Release Version 1.1
# Loading Model
# The monthly GLDAS loads were computed by Tonie van Dam's group
# at the University of Luxembourg
# Month, Day, and DOY are provided to make it easier to plot the loads
# Year, Month, Day, Dayofyear, North Load(mm), East Load(mm), Vert Load(mm)
2000, 1, 15, 15, 0.4, 0.2, -2.5
2000, 2, 15, 46, 0.6, 0.2, -3.4
2000, 3, 15, 75, 0.7, 0.1, -4.7
2000, 4, 15, 106, 0.8, 0.3, -4.5
2000, 5, 15, 136, 0.7, 0.2, -3.3
2000, 6, 15, 167, 0.3, 0.2, -2.0
2000, 7, 15, 197, -0.0, 0.1, -0.8
2000, 8, 15, 228, -0.2, 0.0, 0.3
2000, 9, 15, 259, -0.2, -0.1, 0.4
2000, 10, 15, 289, -0.2, -0.0, -0.3
2000, 11, 15, 320, -0.2, 0.1, -2.3
2000, 12, 15, 350, 0.0, 0.2, -2.8
2001, 1, 15, 15, 0.2, 0.3, -3.4
2001, 2, 15, 46, 0.3, 0.3, -4.0
2001, 3, 15, 74, 0.3, 0.2, -4.4
2001, 4, 15, 105, 0.4, 0.2, -3.8
2001, 5, 15, 135, 0.3, 0.1, -2.4
2001, 6, 15, 166, 0.2, 0.1, -1.0
2001, 7, 15, 196, -0.1, -0.1, 0.2
2001, 8, 15, 227, -0.2, -0.2, 0.8
2001, 9, 15, 258, -0.3, -0.2, 1.1
2001, 10, 15, 288, -0.3, -0.1, 1.2
2001, 11, 15, 319, -0.1, -0.1, 0.8
2001, 12, 15, 349, 0.1, -0.1, -0.3
2002, 1, 15, 15, 0.2, -0.1, -0.6
2002, 2, 15, 46, 0.3, -0.0, -0.7
2002, 3, 15, 74, 0.4, -0.0, -0.6
2002, 4, 15, 105, 0.4, 0.0, -0.2
2002, 5, 15, 135, 0.3, -0.0, 0.5
2002, 6, 15, 166, 0.1, -0.1, 1.5
2002, 7, 15, 196, -0.2, -0.2, 2.3
2002, 8, 15, 227, -0.3, -0.2, 2.7
2002, 9, 15, 258, -0.3, -0.2, 2.0
2002, 10, 15, 288, -0.3, 0.1, 1.3
2002, 11, 15, 319, -0.2, 0.2, 0.7
2002, 12, 15, 349, -0.1, 0.2, 0.2
2003, 1, 15, 15, 0.1, 0.2, -0.3
2003, 2, 15, 46, 0.3, 0.1, -0.7
2003, 3, 15, 74, 0.3, 0.2, -1.2
2003, 4, 15, 105, 0.3, 0.1, -0.9
2003, 5, 15, 135, 0.3, 0.1, -0.4
2003, 6, 15, 166, 0.0, 0.1, 0.8
2003, 7, 15, 196, -0.3, 0.0, 1.8
2003, 8, 15, 227, -0.5, -0.1, 2.5
2003, 9, 15, 258, -0.5, -0.0, 2.4
2003, 10, 15, 288, -0.4, 0.0, 2.4
2003, 11, 15, 319, -0.3, 0.1, 1.9
2003, 12, 15, 349, -0.1, 0.1, 1.2
2004, 1, 15, 15, 0.1, 0.1, 0.5
2004, 2, 15, 46, 0.2, 0.1, 0.1
2004, 3, 15, 75, 0.2, 0.1, -0.4
2004, 4, 15, 106, 0.1, 0.2, -0.1
2004, 5, 15, 136, 0.1, 0.2, 0.6
2004, 6, 15, 167, -0.0, 0.2, 1.2
2004, 7, 15, 197, -0.3, 0.2, 1.8
2004, 8, 15, 228, -0.4, 0.1, 2.4
2004, 9, 15, 259, -0.4, 0.1, 2.2
2004, 10, 15, 289, -0.3, 0.1, 1.3
2004, 11, 15, 320, -0.2, 0.2, -0.5
2004, 12, 15, 350, -0.0, 0.3, -1.4
2005, 1, 15, 15, 0.0, 0.2, -2.9
2005, 2, 15, 46, 0.0, 0.2, -3.8
2005, 3, 15, 74, 0.1, 0.1, -3.7
2005, 4, 15, 105, 0.2, 0.0, -3.3
2005, 5, 15, 135, 0.3, -0.1, -2.7
2005, 6, 15, 166, 0.2, -0.1, -1.8
2005, 7, 15, 196, -0.1, -0.2, -0.1
2005, 8, 15, 227, -0.4, -0.3, 0.6
2005, 9, 15, 258, -0.4, -0.3, 0.9
2005, 10, 15, 288, -0.3, -0.2, 0.4
2005, 11, 15, 319, -0.2, -0.2, 0.0
2005, 12, 15, 349, 0.0, -0.1, -0.3
2006, 1, 15, 15, 0.3, -0.3, -1.1
2006, 2, 15, 46, 0.4, -0.2, -1.4
2006, 3, 15, 74, 0.5, -0.2, -1.7
2006, 4, 15, 105, 0.6, -0.3, -1.9
2006, 5, 15, 135, 0.4, -0.3, -0.8
2006, 6, 15, 166, 0.1, -0.4, 0.5
2006, 7, 15, 196, -0.3, -0.4, 1.6
2006, 8, 15, 227, -0.5, -0.4, 2.0
2006, 9, 15, 258, -0.6, -0.3, 1.9
2006, 10, 15, 288, -0.5, -0.1, 1.1
2006, 11, 15, 319, -0.3, -0.1, 0.7
2006, 12, 15, 349, -0.1, -0.0, 0.2
2007, 1, 15, 15, -0.0, 0.1, -0.7
2007, 2, 15, 46, 0.0, 0.0, -1.0
2007, 3, 15, 74, 0.2, -0.0, -0.9
2007, 4, 15, 105, 0.2, 0.1, -0.8
2007, 5, 15, 135, 0.1, 0.1, -0.0
2007, 6, 15, 166, -0.1, 0.0, 1.1
2007, 7, 15, 196, -0.4, -0.1, 2.2
2007, 8, 15, 227, -0.6, -0.2, 2.6
2007, 9, 15, 258, -0.6, -0.2, 2.7
2007, 10, 15, 288, -0.4, -0.2, 2.2
2007, 11, 15, 319, -0.2, -0.1, 2.0
2007, 12, 15, 349, -0.0, -0.0, 0.7
2008, 1, 15, 15, 0.1, -0.0, -0.4
2008, 2, 15, 46, 0.3, -0.0, -1.1
2008, 3, 15, 75, 0.4, 0.0, -1.3
2008, 4, 15, 106, 0.4, 0.1, -0.6
2008, 5, 15, 136, 0.2, 0.0, 0.3
2008, 6, 15, 167, 0.1, -0.0, 1.0
2008, 7, 15, 197, -0.3, -0.1, 2.2
2008, 8, 15, 228, -0.5, -0.1, 2.7
2008, 9, 15, 259, -0.5, -0.0, 2.4
2008, 10, 15, 289, -0.4, -0.0, 2.1
2008, 11, 15, 320, -0.1, -0.0, 1.6
2008, 12, 15, 350, -0.0, 0.0, 1.0
2009, 1, 15, 15, 0.2, 0.0, -0.1
2009, 2, 15, 46, 0.3, 0.0, -0.7
2009, 3, 15, 74, 0.5, -0.0, -0.7
2009, 4, 15, 105, 0.5, 0.1, -0.5
2009, 5, 15, 135, 0.4, 0.1, 0.1
2009, 6, 15, 166, 0.2, 0.0, 0.8
2009, 7, 15, 196, -0.1, -0.1, 1.7
2009, 8, 15, 227, -0.3, -0.1, 2.5
2009, 9, 15, 258, -0.4, -0.1, 2.7
2009, 10, 15, 288, -0.3, 0.0, 2.1
2009, 11, 15, 319, -0.1, 0.1, 1.6
2009, 12, 15, 349, 0.0, 0.2, 1.0
2010, 1, 15, 15, 0.2, 0.1, -0.1
2010, 2, 15, 46, 0.1, 0.1, -1.4
2010, 3, 15, 74, 0.2, 0.0, -1.6
2010, 4, 15, 105, 0.3, -0.0, -1.1
2010, 5, 15, 135, 0.3, -0.0, -0.5
2010, 6, 15, 166, 0.2, -0.1, 0.3
2010, 7, 15, 196, -0.2, -0.1, 1.4
2010, 8, 15, 227, -0.4, -0.2, 2.2
2010, 9, 15, 258, -0.3, -0.2, 2.4
2010, 10, 15, 288, -0.3, -0.2, 2.1
2010, 11, 15, 319, -0.1, -0.3, 1.5
2010, 12, 15, 349, 0.2, -0.4, 0.2
2011, 1, 15, 15, 0.4, -0.4, -1.4
2011, 2, 15, 46, 0.6, -0.4, -1.7
2011, 3, 15, 74, 0.7, -0.4, -2.3
2011, 4, 15, 105, 0.8, -0.5, -2.2
2011, 5, 15, 135, 0.8, -0.4, -1.9
2011, 6, 15, 166, 0.7, -0.4, -1.2
2011, 7, 15, 196, 0.2, -0.4, 0.3
2011, 8, 15, 227, -0.1, -0.5, 1.4
2011, 9, 15, 258, -0.2, -0.5, 1.9
2011, 10, 15, 288, -0.2, -0.4, 1.6
2011, 11, 15, 319, -0.0, -0.3, 1.3
2011, 12, 15, 349, 0.0, -0.2, 0.9
2012, 1, 15, 15, 0.2, -0.2, 0.5
2012, 2, 15, 46, 0.3, -0.2, -0.0
2012, 3, 15, 75, 0.3, -0.3, -0.1
2012, 4, 15, 106, 0.3, -0.3, 0.2
2012, 5, 15, 136, 0.2, -0.3, 1.1
2012, 6, 15, 167, 0.0, -0.4, 2.2
2012, 7, 15, 197, -0.3, -0.6, 3.1
2012, 8, 15, 228, -0.5, -0.6, 3.5
2012, 9, 15, 259, -0.5, -0.6, 3.6
2012, 10, 15, 289, -0.5, -0.5, 3.4
2012, 11, 15, 320, -0.2, -0.4, 3.0
2012, 12, 15, 350, -0.0, -0.5, 2.3
2013, 1, 15, 15, 0.0, -0.3, 1.6
2013, 2, 15, 46, 0.1, -0.3, 1.3
2013, 3, 15, 74, 0.2, -0.2, 1.2
2013, 4, 15, 105, 0.2, -0.1, 1.3

header.standard=true
# The header is the standard variable number of "#" commented lines
fields=Latitude,Longitude,Elevation,Year, Month, Day, Dayofyear, North_Load[1], East_Load[2], Vert_Load[3]
# The fields - note Latitude, Longitude and Elevation have default values
# extracted from the header with the below pattern definitions
field.Latitude.pattern=#\s*cast\s*([4]+\.[5]+)\s+
field.Longitude.pattern=#\s*cast\s*[6]+\.[7]+\s+([8]+\.[9]+)\s+
field.Elevation.pattern=#\s*cast\s*[10]+\.[11]+\s+[12]+\.[13]+\s+([14]+\.[15]+)\s+
# The pattern attribute is a regular expression used to extract the value from the header
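To make the pattern mechanism concrete, here is a minimal sketch of applying such regular expressions to the "cast" header line with `java.util.regex`. It assumes that each bracketed character class in the patterns is a digit class (`[0-9]`), which the listing as shown does not confirm. The class name `HeaderPatternSketch` and the `extract` helper are hypothetical and are not part of RAMADDA.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class HeaderPatternSketch {

    // ASSUMPTION: the character classes are [0-9]; capture group 1 holds the value.
    static final Pattern LATITUDE =
        Pattern.compile("#\\s*cast\\s*([0-9]+\\.[0-9]+)\\s+");
    static final Pattern LONGITUDE =
        Pattern.compile("#\\s*cast\\s*[0-9]+\\.[0-9]+\\s+([0-9]+\\.[0-9]+)\\s+");
    static final Pattern ELEVATION =
        Pattern.compile("#\\s*cast\\s*[0-9]+\\.[0-9]+\\s+[0-9]+\\.[0-9]+\\s+([0-9]+\\.[0-9]+)\\s+");

    /** Returns the value captured by group 1, or NaN if the pattern does not match. */
    static double extract(Pattern pattern, String header) {
        Matcher matcher = pattern.matcher(header);
        if (!matcher.find()) {
            return Double.NaN;
        }
        return Double.parseDouble(matcher.group(1));
    }

    public static void main(String[] args) {
        String header = "# cast 39.191022 249.322687 2245.0 / station Lat. Lon. Elev.(m)";
        System.out.println("latitude  = " + extract(LATITUDE, header));
        System.out.println("longitude = " + extract(LONGITUDE, header));
        System.out.println("elevation = " + extract(ELEVATION, header));
    }
}
```

Each pattern skips the values that precede the one it captures, so the three patterns can be evaluated independently against the same header text.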

The code is at:

src/org/ramadda/data/point/amrc/AmrcFinalQCPointFile.java

There is an example file:

src/org/ramadda/data/point/amrc/exampleamrcqc.txt

The plugin is defined in:

src/org/ramadda/geodata/point/amrc/amrctypes.xml

To run this from the command line (assuming you've installed the pointtools):

pointchecker.sh -class org.ramadda.data.point.amrc.AmrcFinalQCPointFile exampleamrcqc.txt

Or if you have your environment set up:

java org.ramadda.data.point.amrc.AmrcFinalQCPointFile exampleamrcqc.txt

The AmrcFinalQCPointFile class reads the final QC'ed text file format:

Year: 2001 Month: 09 ID: BPT ARGOS: 8923 Name: Bonaparte Point
Lat: 64.78S Lon: 64.07W Elev: 8m
2001 244 9 1 0000 -2.5 444.0 0.2 110.0 444.0 444.0
2001 244 9 1 0010 -2.5 444.0 0.2 114.0 444.0 444.0
2001 244 9 1 0020 -2.5 444.0 0.2 110.0 444.0 444.0
2001 244 9 1 0030 -2.5 444.0 0.0 0.0 444.0 444.0
2001 244 9 1 0040 -2.5 444.0 0.0 0.0 444.0 444.0

The columns are:

year,julian_day,month,day,hhmm,temperature,pressure,wind_speed,wind_direction,relative_humidity,delta_t

We need to write some code because the point data API expects geo and time referencing. The AmrcFinalQCPointFile code extracts the metadata from the header and, in effect, adds site, lat, lon and elevation to each row. The API sees:

site_id,latitude,longitude,elevation,year, julian day, month, day, hhmm, ...

In the AmrcFinalQCPointFile.prepareToVisit method the two-line header is read. The georeferencing metadata is then used to define the fields as follows, using the value="..." field attribute to insert the metadata values:

fields=
site_id[ type="string" value="BPT" ],
latitude[ value="-64.78" ],
longitude[ value="-64.07" ],
elevation[ value=" 8" ],
year[ ],
julian_Day[ ],
month[ ],
day[ ],
time[ type="string" ],
temperature[ unit="Celsius" chartable="true" ],
pressure[ unit="hPa" chartable="true" ],
wind_speed[ unit="m/s" chartable="true" ],
wind_direction[ unit="degrees" ],
relative_humidity[ unit="%" chartable="true" ],

This allows us to take the metadata in the header (e.g., location) and have it applied to the data records. The base point code (for now) doesn't handle this particular way of expressing time, so the AmrcFinalQCPointFile code handles it in its processAfterReading method, which parses the date/time from the column values and sets the time on the Record.
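The two steps described here, turning the header's location into signed values and building a time from the year, day-of-year and hhmm columns, can be sketched as follows. This is not the AmrcFinalQCPointFile source. The class `AmrcHeaderSketch`, its method names and the regular expression are hypothetical, and the sketch assumes the location line always has the `Lat: ..S Lon: ..W Elev: ..m` shape of the example and that no time zone conversion is needed.

```java
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class AmrcHeaderSketch {

    // ASSUMPTION: the second header line looks like "Lat: 64.78S Lon: 64.07W Elev: 8m".
    private static final Pattern LOCATION = Pattern.compile(
        "Lat:\\s*([0-9.]+)([NS])\\s+Lon:\\s*([0-9.]+)([EW])\\s+Elev:\\s*([0-9.]+)m");

    /** Returns {latitude, longitude, elevation}; south and west become negative. */
    static double[] parseLocation(String headerLine) {
        Matcher m = LOCATION.matcher(headerLine);
        if (!m.find()) {
            throw new IllegalArgumentException("Unrecognized header: " + headerLine);
        }
        double latitude = Double.parseDouble(m.group(1));
        double longitude = Double.parseDouble(m.group(3));
        if (m.group(2).equals("S")) {
            latitude = -latitude;
        }
        if (m.group(4).equals("W")) {
            longitude = -longitude;
        }
        return new double[] {latitude, longitude, Double.parseDouble(m.group(5))};
    }

    /** Combines the year, day-of-year and four-digit hhmm columns into a date/time. */
    static LocalDateTime parseTime(int year, int dayOfYear, String hhmm) {
        LocalDate date = LocalDate.ofYearDay(year, dayOfYear);
        int hour = Integer.parseInt(hhmm.substring(0, 2));
        int minute = Integer.parseInt(hhmm.substring(2, 4));
        return date.atTime(hour, minute);
    }

    public static void main(String[] args) {
        double[] location = parseLocation("Lat: 64.78S Lon: 64.07W Elev: 8m");
        System.out.println(location[0] + ", " + location[1] + ", " + location[2]);
        System.out.println(parseTime(2001, 244, "0010"));
    }
}
```

For the example file this gives the same signed latitude and longitude that appear in the `value="..."` attributes, and day 244 of 2001 resolves to 1 September, in agreement with the month and day columns of the data rows.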

LAT,LON,TIME,THICK,ELEVATION,FRAME,SURFACE,BOTTOM,QUALITY
76.807589,-48.918178,48974.2143,-9999.00,4158.4286,2007091001001, -5.87,-9999.00,0
76.807579,-48.917978,48974.2504,-9999.00,4158.5008,2007091001001, -4.63,-9999.00,0
76.807569,-48.917778,48974.2865,-9999.00,4158.5731,2007091001001, -3.40,-9999.00,0

To provide support for this data format we need to create two classes: McordsIrmcr2File and McordsIrmcr2Record. The basic structure is that the "File" classes are what get instantiated. They can do some initialization (e.g., read the header) and create a Record class that is used to read and store the values for one line or record of data.

tclsh definerecords.tcl

This script generates a self-contained McordsIrmcr2File class. This class contains a generated McordsIrmcr2Record class that does the actual reading. This code is generated by the generateRecordClass procedure defined in ../..record/generate.tcl. The following arguments are used:

| Argument | Description |
| --- | --- |
| org.ramadda.data.point.icebridge.McordsIrmcr2Record | Generate this Java class. |
| -lineoriented 1 | This is a line-oriented text file, not a binary file. |
| -delimiter {,} | Comma delimited. |
| -skiplines 1 | Skip the first line in the text file. It is a header. |
| -makefile 1 | Normally, generateRecordClass generates just a Record class. This says to also make a McordsIrmcr2File class that contains the Record class, which makes the reader self-contained. |
| -filesuper org.ramadda.data.point.text.TextFile | The superclass of the file class. |
| -super org.unavco.data.lidar.LidarRecord | The superclass of the record. |
| {latitude double -declare 0} | Define a field called latitude of type double. The -declare 0 says not to declare the latitude attribute in the Record class; the record uses the latitude attribute of the base PointRecord class instead. Look at AtmIceSSNRecord in definerecords.tcl to see how to override the getLatitude methods. |
| {longitude double -declare 0} | |
| {time double} | |
| {thickness double -missing "-9999.0" -chartable true } | Specify a missing value and set the chartable flag. RAMADDA uses the chartable flag to determine which fields can be charted. |
| {altitude double -chartable true -declare 0} | This uses the altitude attribute of the base class. |
| {frame int} | |
| {surface double -chartable true -missing "-9999.0"} | |
| {bottom double -chartable true -missing "-9999.0"} | |
| {quality int -chartable true } | |
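As a rough illustration of what a record reader for this format has to do for each line, the sketch below splits a comma-delimited line, parses the nine columns, and maps the `-9999.0` missing value to NaN for the fields that declare one. It is hand-written for illustration and is not the output of generateRecordClass; the class name `McordsRecordSketch` and its methods are hypothetical. The sketch stores the frame in a `long` because the sample value 2007091001001 does not fit in a 32-bit Java `int`.

```java
public class McordsRecordSketch {

    /** The missing-value marker declared for thickness, surface and bottom. */
    static final double MISSING = -9999.0;

    double latitude;
    double longitude;
    double time;
    double thickness;
    double altitude;
    long frame;       // long, since the sample frame values exceed the int range
    double surface;
    double bottom;
    int quality;

    /** Parses a value and replaces the missing marker with NaN. */
    static double parseWithMissing(String token) {
        double value = Double.parseDouble(token.trim());
        return value == MISSING ? Double.NaN : value;
    }

    /** Parses one data line (not the header line) into a record. */
    static McordsRecordSketch parseLine(String line) {
        String[] tokens = line.split(",");
        if (tokens.length != 9) {
            throw new IllegalArgumentException("Expected 9 columns: " + line);
        }
        McordsRecordSketch record = new McordsRecordSketch();
        record.latitude = Double.parseDouble(tokens[0].trim());
        record.longitude = Double.parseDouble(tokens[1].trim());
        record.time = Double.parseDouble(tokens[2].trim());
        record.thickness = parseWithMissing(tokens[3]);
        record.altitude = Double.parseDouble(tokens[4].trim());
        record.frame = Long.parseLong(tokens[5].trim());
        record.surface = parseWithMissing(tokens[6]);
        record.bottom = parseWithMissing(tokens[7]);
        record.quality = Integer.parseInt(tokens[8].trim());
        return record;
    }

    public static void main(String[] args) {
        McordsRecordSketch record = parseLine(
            "76.807589,-48.918178,48974.2143,-9999.00,4158.4286,2007091001001, -5.87,-9999.00,0");
        System.out.println("surface=" + record.surface + " thickness=" + record.thickness);
    }
}
```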

java org.ramadda.data.point.icebridge.McordsIrmcr2File <data file>

To use the file reader within RAMADDA one has to add a new RAMADDA entry type in a plugin. The main RAMADDA point plugin is located here:

src/org/ramadda/geodata/point/icebridgetypes.xml

In icebridgetypes.xml is the entry definition for the Mcords file type. This specifies a record.file.class property that is used to instantiate the file reader.

<type
    name="type_point_icebridge_mccords_irmcr2"
    description="McCords Irmcr2 Data"
    handler="org.ramadda.data.services.PointTypeHandler"
    super="type_point_icebridge" >
  <property name="icon" value="/point/nasa.png"/>
  <property name="record.file.class" value="org.ramadda.data.point.icebridge.McordsIrmcr2File"/>
</type>
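A property that names a class, such as record.file.class, is typically turned into an object through Java reflection. The sketch below shows that general technique only and makes no claim about how RAMADDA's PointTypeHandler actually does it. `RecordFileLoaderSketch`, `DemoPointFile` and `instantiate` are hypothetical names, and the sketch assumes the reader class has a public no-argument constructor.

```java
public class RecordFileLoaderSketch {

    /** Hypothetical stand-in for a reader class named in a record.file.class property. */
    public static class DemoPointFile {
        public String describe() {
            return "demo point file reader";
        }
    }

    /** Creates an instance of the named class using its no-argument constructor. */
    static Object instantiate(String className) {
        try {
            return Class.forName(className).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Could not create reader: " + className, e);
        }
    }

    public static void main(String[] args) {
        // In a plugin the class name would come from the property value.
        String className = DemoPointFile.class.getName();
        DemoPointFile file = (DemoPointFile) instantiate(className);
        System.out.println(file.describe());
    }
}
```

Loading by name is what lets a plugin add a new reader through configuration alone, without changes to the code that uses it.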

generateRecordClass org.unavco.data.lidar.icebridge.QFit10WordRecord \
    -super org.unavco.data.lidar.icebridge.QfitRecord -fields {
    { relativeTime int -declare 0}
    { laserLatitude int -declare 0}
    { laserLongitude int -declare 0}
    { elevation int -declare 0 -unit mm}
    { startSignalStrength int }
    { reflectedSignalStrength int }
    { azimuth int -unit millidegree}
    { pitch int -unit millidegree}
    { roll int -unit millidegree}
    { gpsTime int }
}

The records all have some common fields: relativeTime, latitude, longitude and elevation. These fields have various scaling factors. We declare those fields in the base (hand-written) QfitRecord class, which in turn implements the getLatitude, getLongitude, etc. methods, scaling the integer values accordingly.

The QfitFile is not generated. It handles the logic of determining which record format the file is in and its endianness, and it pulls out the base date from the file name.
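The combination of byte order handling and integer scaling can be sketched with `java.nio.ByteBuffer`. This is not the QfitRecord or QfitFile code. `QfitScalingSketch` and its methods are hypothetical, and the degree scale factor (integer microdegrees) is an assumption made for illustration; only the millimeter unit for elevation comes from the field definitions above.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

public class QfitScalingSketch {

    // ASSUMPTION: latitude and longitude are stored as integer microdegrees.
    static final double DEGREE_SCALE = 1.0e-6;
    // Elevation is declared with "-unit mm", so millimeters convert to meters.
    static final double MM_TO_M = 1.0e-3;

    /** Decodes consecutive 4-byte integer words using the given byte order. */
    static int[] readWords(byte[] bytes, ByteOrder order) {
        ByteBuffer buffer = ByteBuffer.wrap(bytes).order(order);
        int[] words = new int[bytes.length / 4];
        for (int i = 0; i < words.length; i++) {
            words[i] = buffer.getInt();
        }
        return words;
    }

    /** Scales a raw integer angle to degrees. */
    static double toDegrees(int raw) {
        return raw * DEGREE_SCALE;
    }

    /** Scales a raw integer elevation in millimeters to meters. */
    static double toMeters(int rawMillimeters) {
        return rawMillimeters * MM_TO_M;
    }

    public static void main(String[] args) {
        // Encode two words big-endian, then decode them with each byte order.
        byte[] bytes = ByteBuffer.allocate(8).order(ByteOrder.BIG_ENDIAN)
            .putInt(76807589).putInt(4158428).array();
        int[] correct = readWords(bytes, ByteOrder.BIG_ENDIAN);
        int[] swapped = readWords(bytes, ByteOrder.LITTLE_ENDIAN);
        System.out.println(toDegrees(correct[0]) + " deg, " + toMeters(correct[1]) + " m");
        System.out.println("wrong byte order yields " + swapped[0]);
    }
}
```

Reading with the wrong byte order produces values far outside the valid range, which is one way a file class could decide which order applies.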
